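LangChain is a framework for developing applications powered by large language models (LLMs). Put simply, it makes it easier to build applications on top of LLMs such as OpenAI's GPT models or Google's PaLM models.

LangChain website: https://www.langchain.com/

Hands-on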

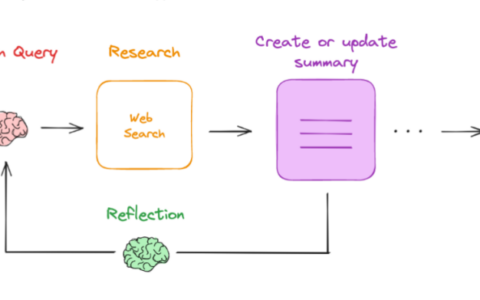

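Giving a large model internet access amounts to giving it a search engine. One approach is to run the query through a search engine first and pass the results to the model to answer. The other is to combine function calling with a search engine API and let the model decide for itself whether it needs to go online.

The free search engine used here is DuckDuckGo. Its results may not be as good as paid options such as the Bing API or the Google API, but it is enough for simply testing an implementation of internet access.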
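The first approach described above (search first, then hand the results to the model) can be sketched without any framework. This is only a minimal illustration: `answer_search_first`, `fake_search` and `echo_llm` are hypothetical names, and the result keys `title` and `body` are an assumption about what the search function returns.

```python
from typing import Callable


def answer_search_first(
    question: str,
    search: Callable[[str], list[dict]],
    ask_llm: Callable[[str], str],
) -> str:
    """Search first, then hand the results to the model as context."""
    results = search(question)
    context = "\n".join(f"- {r['title']}: {r['body']}" for r in results)
    prompt = (
        "Answer the question using the search results below.\n\n"
        f"Search results:\n{context}\n\n"
        f"Question: {question}"
    )
    return ask_llm(prompt)


# Stand-ins so the sketch runs offline; swap in a real search and model call.
def fake_search(query: str) -> list[dict]:
    return [{"title": "Example", "body": f"A snippet about {query}"}]


def echo_llm(prompt: str) -> str:
    return prompt


print(answer_search_first("LangChain", fake_search, echo_llm))
```

With this approach the search runs on every question, whether or not it is needed. The function-calling approach used in the rest of this article leaves that decision to the model.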

First, a quick pass through how LangChain is used.

Connect to an OpenAI model and use non-streaming and streaming responses:

    from langchain.chat_models import init_chat_model
    from langchain_core.messages import HumanMessage

    api_key = "your_api_key"

    model = init_chat_model("gpt-4o-mini", model_provider="openai", api_key=api_key)

    # Non-streaming: invoke() returns the complete reply in one message.
    print(model.invoke([HumanMessage(content="Who are you?")]))

    messages = [
        HumanMessage("Who are you?"),
    ]

    # Streaming: stream() yields chunks as they arrive; "|" marks the chunk boundaries.
    for token in model.stream(messages):
        print(token.content, end="|")
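If the full reply is also needed after streaming (for logging, say), the chunks can be collected while they are printed. The sketch below uses a fake stream so it runs offline. `print_and_collect` is a hypothetical helper, and it assumes each chunk's `content` is a plain string, which may not hold for every provider.

```python
from types import SimpleNamespace
from typing import Iterable


def print_and_collect(chunks: Iterable) -> str:
    """Print each chunk as it arrives and return the joined text."""
    parts = []
    for chunk in chunks:
        print(chunk.content, end="", flush=True)
        parts.append(chunk.content)
    print()  # finish the line once the stream ends
    return "".join(parts)


# Stand-in for model.stream(messages): any iterable of objects with .content.
fake_stream = [SimpleNamespace(content=t) for t in ["I am ", "an ", "assistant."]]
full_text = print_and_collect(fake_stream)
```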

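Next, connect to a model hosted in China through its OpenAI-compatible endpoint, again with non-streaming and streaming responses: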

    from langchain.chat_models import init_chat_model
    from langchain_core.messages import HumanMessage

    api_key = "your_api_key"
    base_url = "https://api.siliconflow.cn/v1"

    # The provider stays "openai"; only base_url and the model name change.
    model = init_chat_model("Qwen/Qwen2.5-72B-Instruct", model_provider="openai", api_key=api_key, base_url=base_url)

    # Non-streaming response.
    print(model.invoke([HumanMessage(content="Who are you?")]))

    messages = [
        HumanMessage("Who are you?"),
    ]

    # Streaming response.
    for token in model.stream(messages):
        print(token.content, end="|")

Because this feature relies on tool calling, let's now look at how tool calling works in LangChain:

    from langchain.chat_models import init_chat_model
    from langchain_core.messages import HumanMessage
    from langchain_core.tools import tool


    @tool
    def add(a: int, b: int) -> int:
        """Adds a and b."""
        return a + b


    tools = [add]

    api_key = "your_api_key"
    base_url = "https://api.siliconflow.cn/v1"

    model = init_chat_model("Qwen/Qwen2.5-72B-Instruct", model_provider="openai", api_key=api_key, base_url=base_url)

    # Tell the model which tools it may call.
    llm_with_tools = model.bind_tools(tools)

    query = "What is 1+3?"
    messages = [HumanMessage(query)]

    # First call: the model may reply with tool calls instead of a final answer.
    ai_msg = llm_with_tools.invoke(messages)
    print(ai_msg.tool_calls)
    messages.append(ai_msg)

    # Run each requested tool and append its result to the conversation.
    for tool_call in ai_msg.tool_calls:
        selected_tool = {"add": add}[tool_call["name"].lower()]
        tool_msg = selected_tool.invoke(tool_call)
        print(tool_msg)
        messages.append(tool_msg)

    # Second call: the model answers using the tool results.
    print(llm_with_tools.invoke(messages).content)
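To make the dispatch step less of a black box, here is a framework-free sketch of what happens conceptually for each tool call: look up the function by name, call it with the model-supplied arguments, and tag the result with the call's id so the model can match it to its request. This is not LangChain's implementation. `TOOLS` and `run_tool_call` are hypothetical names, and the returned dict is only an illustrative stand-in for a tool message.

```python
def add(a: int, b: int) -> int:
    return a + b


# Map tool names to plain Python functions.
TOOLS = {"add": add}


def run_tool_call(tool_call: dict) -> dict:
    """Execute one tool call dict carrying "name", "args" and "id" keys."""
    func = TOOLS.get(tool_call["name"])
    if func is None:
        # Report the problem back instead of crashing the conversation.
        content = f"Unknown tool: {tool_call['name']}"
    else:
        content = str(func(**tool_call["args"]))
    return {"role": "tool", "tool_call_id": tool_call["id"], "content": content}


# Same shape as the entries printed from ai_msg.tool_calls.
example_call = {"name": "add", "args": {"a": 1, "b": 3}, "id": "call_1", "type": "tool_call"}
print(run_tool_call(example_call))
```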

Now let's use DuckDuckGo:

    from duckduckgo_search import DDGS

    query = "Who is mingupup?"

    # Fetch up to 10 text results for the query.
    results = DDGS().text(query, max_results=10)
    print(results)
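Raw search results can be long, so it may help to trim them before handing them to a model. The sketch below assumes each result is a dict with `title`, `href` and `body` keys; check the printed output above against your installed version. `format_results` is a hypothetical helper, not part of `duckduckgo_search`.

```python
def format_results(results: list[dict], max_body_chars: int = 200) -> str:
    """Turn a list of search results into a compact numbered text block."""
    lines = []
    for i, r in enumerate(results, start=1):
        body = r.get("body", "")[:max_body_chars]  # truncate long snippets
        lines.append(f"{i}. {r.get('title', '')} ({r.get('href', '')})\n   {body}")
    return "\n".join(lines)


# Made-up sample data so the sketch runs without network access.
sample = [
    {"title": "Example page", "href": "https://example.com", "body": "z" * 500},
]
print(format_results(sample))
```

Fewer characters per result means fewer tokens per request, at the cost of giving the model less context to work with.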

Combine the search engine with tool calling:

    import json

    from duckduckgo_search import DDGS
    from langchain.chat_models import init_chat_model
    from langchain_core.messages import HumanMessage
    from langchain_core.tools import tool


    @tool
    def add(a: int, b: int) -> int:
        """Adds a and b."""
        return a + b


    @tool
    def get_web_data(query: str) -> str:
        """Search the web with the DuckDuckGo search engine."""
        results = DDGS().text(query, max_results=10)
        # Serialize the result list so the return value matches the str annotation.
        return json.dumps(results, ensure_ascii=False)


    tools = [add, get_web_data]

    api_key = "your_api_key"
    base_url = "https://api.siliconflow.cn/v1"

    model = init_chat_model("Qwen/Qwen2.5-72B-Instruct", model_provider="openai", api_key=api_key, base_url=base_url)
    llm_with_tools = model.bind_tools(tools)

    query = "Who is mingupup?"
    messages = [HumanMessage(query)]

    # First call: the model decides whether it needs to search.
    ai_msg = llm_with_tools.invoke(messages)
    print(ai_msg.tool_calls)
    messages.append(ai_msg)

    # Run whichever tools the model asked for.
    for tool_call in ai_msg.tool_calls:
        selected_tool = {"add": add, "get_web_data": get_web_data}[tool_call["name"].lower()]
        tool_msg = selected_tool.invoke(tool_call)
        print(tool_msg)
        messages.append(tool_msg)

    # Second call: the model answers using the search results.
    print(llm_with_tools.invoke(messages).content)
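The code above handles exactly one round of tool calls. If the model wants to search again after seeing the first results, a loop is needed. The sketch below is one possible shape for it, written against duck-typed objects so it runs offline. `run_with_tools`, `FakeModel` and `FakeAddTool` are hypothetical. With real LangChain objects you would pass `llm_with_tools` and a name-to-tool dict, on the assumption that they expose the same `invoke` and `tool_calls` interface used above.

```python
from types import SimpleNamespace


def run_with_tools(model, tools_by_name: dict, messages: list, max_rounds: int = 3):
    """Call the model repeatedly until it stops requesting tools."""
    for _ in range(max_rounds):
        ai_msg = model.invoke(messages)
        messages.append(ai_msg)
        if not ai_msg.tool_calls:
            return ai_msg  # no more tool requests: this is the final answer
        for tool_call in ai_msg.tool_calls:
            messages.append(tools_by_name[tool_call["name"]].invoke(tool_call))
    raise RuntimeError(f"Model still requested tools after {max_rounds} rounds")


class FakeModel:
    """Asks for one tool call, then answers once a tool result is present."""

    def invoke(self, messages):
        if any(isinstance(m, dict) and m.get("role") == "tool" for m in messages):
            return SimpleNamespace(content="The answer is 4.", tool_calls=[])
        call = {"name": "add", "args": {"a": 1, "b": 3}, "id": "call_1"}
        return SimpleNamespace(content="", tool_calls=[call])


class FakeAddTool:
    def invoke(self, tool_call):
        total = tool_call["args"]["a"] + tool_call["args"]["b"]
        return {"role": "tool", "tool_call_id": tool_call["id"], "content": str(total)}


final = run_with_tools(FakeModel(), {"add": FakeAddTool()}, ["What is 1+3?"])
print(final.content)
```

The `max_rounds` cap is a design choice: it keeps a confused model from looping, and therefore spending tokens, indefinitely.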

That quickly gives us a simple internet-access feature. If you also want to spin up a chat application quickly to test it, you can use Chainlit:

    import json
    from datetime import datetime

    import chainlit as cl
    from duckduckgo_search import DDGS
    from langchain.chat_models import init_chat_model
    from langchain_core.messages import HumanMessage
    from langchain_core.tools import tool


    #@cl.step(type="tool") # type: ignore
    @tool
    def get_time() -> str:
        """Get the current time."""
        now = datetime.now()
        # Format the time as a readable sentence.
        formatted_time = now.strftime("The current time is %Y-%m-%d %H:%M.")
        return formatted_time


    #@cl.step(type="tool") # type: ignore
    @tool
    def get_weather(city: str) -> str:
        """Get the weather for a city."""
        # Hard-coded placeholder, not a real weather lookup.
        return f"The weather in {city} is sunny, 25 degrees."


    #@cl.step(type="tool") # type: ignore
    @tool
    def get_web_data(query: str) -> str:
        """Search the web with the DuckDuckGo search engine."""
        results = DDGS().text(query, max_results=10)
        # Serialize the result list so the return value matches the str annotation.
        return json.dumps(results, ensure_ascii=False)


    tools = [get_time, get_weather, get_web_data]

    api_key = "your_api_key"
    base_url = "https://api.siliconflow.cn/v1"

    model = init_chat_model("Qwen/Qwen2.5-72B-Instruct", model_provider="openai", api_key=api_key, base_url=base_url)
    llm_with_tools = model.bind_tools(tools)


    @cl.on_chat_start
    def on_chat_start():
        print("A new chat session has started!")


    @cl.on_message
    async def on_message(message: cl.Message):
        response = cl.Message(content="")
        # Each message starts a fresh conversation; no history is kept between turns.
        messages = [HumanMessage(message.content)]

        # Use the async variants so the model call does not block the event loop.
        ai_msg = await llm_with_tools.ainvoke(messages)
        print(ai_msg.tool_calls)
        messages.append(ai_msg)

        for tool_call in ai_msg.tool_calls:
            selected_tool = {"get_time": get_time, "get_weather": get_weather, "get_web_data": get_web_data}[tool_call["name"].lower()]
            tool_msg = selected_tool.invoke(tool_call)
            print(tool_msg)
            messages.append(tool_msg)

        # Stream the final answer to the UI token by token.
        async for token in llm_with_tools.astream(messages):
            await response.stream_token(token.content)

        await response.send()


    @cl.on_stop
    def on_stop():
        print("The user wants to stop the task!")


    @cl.on_chat_end
    def on_chat_end():
        print("The user disconnected!")

To run it, enter chainlit run followed by the file name.

Reposted work. Original author: 小铭同学的AI工具学习记录. Source: https://mp.weixin.qq.com/s/U_yzoa9tNfdN9MdyIdE6Og
